Where’s your (over)head at? Pt I

The first in a two-part series outlining how to think about data processing code in the Python world. This first article evaluates whether Python is the right tool for you, and what to consider before embarking on a new data processing project.

TL;DR This is the first in a two-part series of articles outlining how to think about data processing code in the Python world, and more specifically, how we can improve our code performance while writing legible, maintainable, production-quality code.

In this first article, we’re going to evaluate whether Python is the right tool for your use-case, and what you should consider before embarking on a new data processing project.

.jpg)

The Trade-off

When benchmarked against other languages, Python consistently ranks toward the lower end of the performance spectrum. In a world where speed is often-times king, it begs the question, why is it such a popular language? The answer to this question is multi-faceted, but one of the core reasons is that Python has been designed, and purpose-built, with the intention of being easy to use.

Compared to other languages, writing Python code tends to require less thinking on the developer’s behalf, when it comes to how the program manages underlying concepts like data types and memory. What this illustrates, is a very fundamental trade-off when it comes to software engineering, namely, where is our code placing the majority of its overhead, is it on the machine, or on the developer?

Python utilises (amongst many other design features) abstractions, grammar, and syntax which make the language fast to learn, easy to read and write, and syntactically simple to understand.

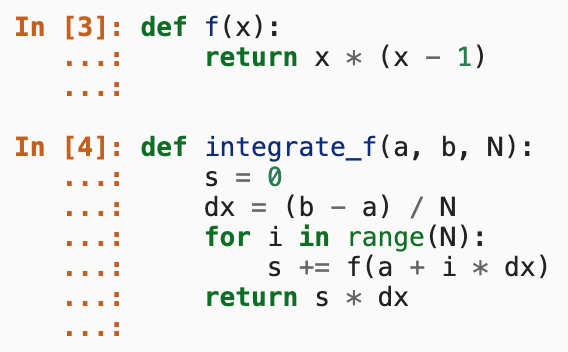

To illustrate this, let’s have a look at a snippet of pure Python code, taken from the Pandas documentation.

We see 2 functions written in pure Python, which, in terms of our aforementioned trade-off, are a staunch example of the former. The code is clean, concise, and simple. The syntax is arguably as close to pseudo-code as we are going to find in a functioning programming language. Notice how the developer does not have to think much about the likes of variable types, pointers, references, or memory management.

However, when our pure functions are applied to a large set of data, the performance is going to be sluggish. Part of the reason is that all of these aforementioned concepts, which the developer does not need to explicitly handle, still exist in Python. They are simply hidden from the developer and pushed down on to the machine (or in this case, the Python interpreter). This is one part of the reason why Python code tends to be comparatively slow to run; the machine has to do more thinking on the developer’s behalf.

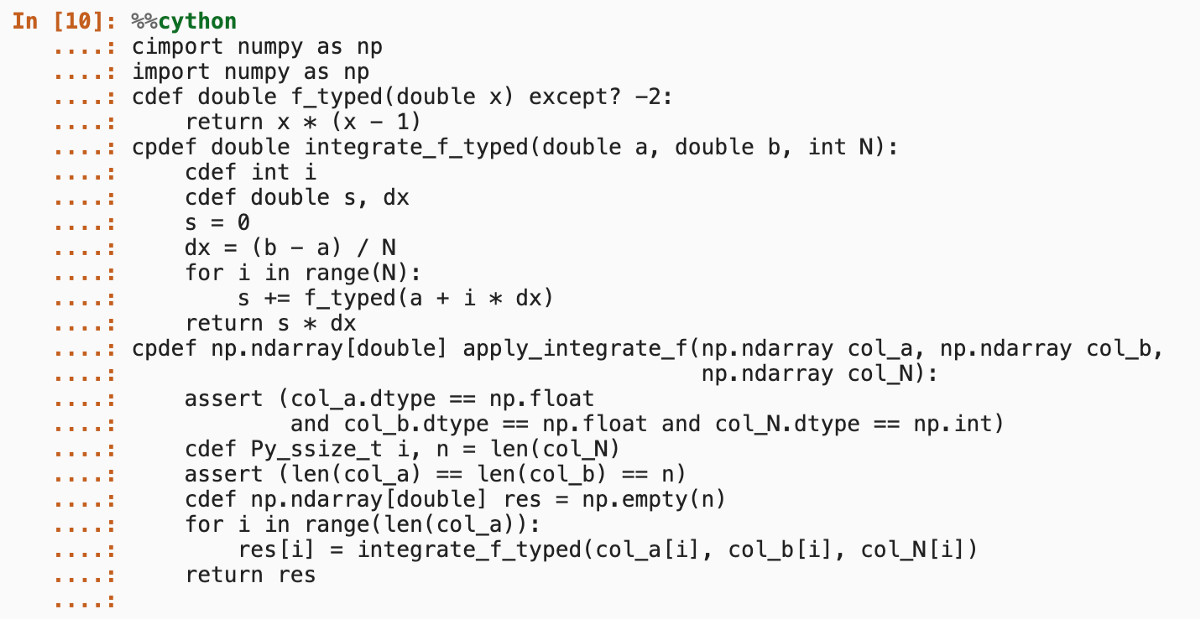

This second snippet (taken from the same documentation), shows the same 2 functions we have previously seen, now implemented in Cython, with the addition of a third function (apply_integrate_f) which optimises how our previous functions can be applied over a large array of values. This code is highly performant, however, we immediately notice that this code is far more verbose and confusing. The developer is now forced to think about the likes of type annotations, assert statements, and somewhat alien constructs such as the Py_ssize_t object. It is going to be significantly more challenging to read, write, and iterate on when compared to our base Python code, and as such, places more overhead on the developer.

Assess the alternatives

Writing the same functions which we saw previously, in a language like Kotlin, Rust, C++, or Go would certainly yield better performance than the pure Python code, and in most cases be clearer than the unruly Cython implementation. Even if Python is your daily driver or your first love, you should definitely consider these as viable alternatives when you are about to embark on a new project, particularly when this project calls for snappy, performant execution times.

But what about existing codebases which are – either by conscious choice, or lack thereof – already written in Python?

Is it time to ditch Python?

Here at Vortexa, we are by no means a ‘Python first’ company. Instead we tend to adhere to the principle of attempting to use the right tool for the right job. Nevertheless, Python is a prominent feature in our production codebase, largely because a significant proportion of what we do, at its very core, is the processing and modelling of complex real-world data; and when it comes to this domain, very few languages offer as complete of an ecosystem and toolkit as Python does.

Data processing systems and pipelines are increasingly being faced with the demands of an ever-growing body of available data, as well as the rising desire for real-time analytics. As a result, these systems need to be able to crunch a larger volume of data, as well as being able to do so rapidly, and frequently. Every language has its strengths and weaknesses, and as we have already discussed, despite its ease of use and a rich ecosystem of well-built tools, Python’s Achilles-heel, more often than not, is its runtime performance. In the face of this, we as engineers are forced to ask ourselves whether we should abandon some, or all, of our Python code, which may not perform as well as other languages could, in favour of a new language.

Joel Spolsky (co-founder and former CEO of StackOverflow) published a wildly popular blog post titled Thing You Should Never Do, Part 1. In this, he made an impassioned argument against the very idea of scrapping existing systems and re-writing them from scratch. Amongst other things, he made the following arguments.

“When optimising for performance 1% of the work gets you 99% of the bang” – This is essentially a more extreme manifestation of Pareto’s Principle, and a common theme in software engineering; the parts of our code, responsible for causing performance bottlenecks, tend to make up a minute fraction of the actual codebase. Moving an entire project into a new language, in order to alleviate the issues arising from such a small fraction of its code, requires understanding, translating, and re-writing the entire codebase. This task alone can incur a significant time commitment for a dev team.

Understanding the what does not necessarily mean you understand the why – In what can be considered a real-life example of the Lindy Effect, code which has been around for a long time (particularly in production), tends to accrue significant latent knowledge and functionality. When trying to move this code, it is only natural to attempt to streamline and refactor snippets that look messy, or out of place. But what you will often find is that these odd-looking snippets of code are the results of critical bug fixes, or ‘hacks’ and monkey-patches, written in order to address idiosyncrasies in your data or infrastructure. Discovering these fixes the hard way can turn into a fatal time-sink.

Subsequently, Joel argues that methodical, incremental refactoring of existing code, is almost always preferable to restarting from scratch.

Final thoughts

Adding to Joel’s arguments, here a few final thoughts of ours to add in to the mix.

Why did you choose Python in the first place? – More often than not, when a project was first built in Python, it was done so for a reason. In Vortexa’s case, the prevalence of Python in our data processing and modelling code is, in large part, thanks to the power and variety of third-party tools available for the job; none more so than Pandas and NumPy.

These libraries have become the industry standard for data processing for a reason. They provide APIs and well-thought-out functionality which allow developers to swiftly interact with their data, and to apply a litany of transformations, aggregations, and visualisations while integrating seamlessly with a fantastic number of data-storage formats. Deserting these libraries for other languages means that a relatively standard ETL job, which can be written in tens of lines of Pandas code, can require hundreds of lines of new code (not to mention tests and adjusted deployment code), as well as an expensive mental paradigm shift.

There is one more question to ask yourself; is your Python code even as performant as it could be? Even in large systems, covering multiple modules and steps, the fundamental principles of algorithmic complexity still apply. Do you know if your algorithms are running in quadratic, logarithmic, linear, or constant time, and can you improve upon them? In a similar vein, can you alter how much data your code needs to process? Does it have to re-process every data point every time, or can you do delta calculations or incremental processing?

In summary, when you are starting a new project, ask yourself carefully where you want (or can afford) to have your overhead applied. Are runtime performance and developer control the top priorities for the code you are about to write? In which case, Python is probably not the right tool for the job. However, if your code needs to handle messy, frequently changing real-world data, and you can afford to sacrifice a bit of runtime performance in favour of flexible, highly accessible code with fast developer iteration times, then the likes of Python and Pandas should probably be your go-to.

Regardless of which choice you make, ensure that you allocate adequate thought before starting. Rewriting existing code from scratch, at a later date is a risky and time-consuming endeavour, and it can be hard to justify months of developer time being invested into such a task when the resulting output yields no new features or discernible improvements for the consumer.

If you already have a significant amount of code written in Pandas, stay tuned for the second part of this post, where we will speak about common performance issues, and how to address them.

For more insights into the tech behind Vortexa, subscribe to our vorTECHsa monthly newsletter and to our Medium page.